Where next for SEO?

In my recent post on Copify and content mills, I suggested that the current vogue for pumping out reams of low-grade content in order to generate backlinks and/or attract natural traffic could not last. In this post, I’d like to expand further on that point, focusing on the issues facing natural search right now and what the future might hold.

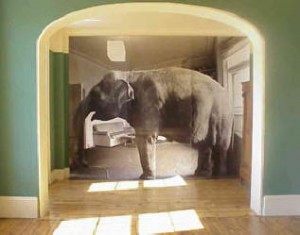

The elephant in the room

Thank heavens we fitted that laminate flooring

An ‘elephant in the room’ is an inconvenient but hugely significant truth that no one wants to acknowledge. For SEO right now, that elephant is the unsustainability of current search-marketing practices.

The truth is that the long-term viability of the whole search paradigm (site publishes, user searches, user finds) simply isn’t served by the things many search marketers do: article marketing, online PR and ‘SEO fodder’.

While the music plays, we’re still dancing

All these tactics do is soak up resources to deliver a temporary advantage that a competitor can easily reverse by pursuing exactly the same strategy (even using almost identical content). Meanwhile, they clog up the internet with spam, degrade the browsing experience and make it ever harder for the ‘proper’ search experience to take place. It’s a classic case of the tragedy of the commons.

The parallels with the financial crisis are striking. Far from ‘sleepwalking into disaster’, many senior financiers were fully aware that their business practices would be damaging over the long term – but the short-term profits were just too attractive to ignore. ‘When the music stops, in terms of liquidity, things will be complicated,’ said Chuck Prince, Citigroup CEO, in 2007. ‘But as long as the music is playing, you’ve got to get up and dance. We’re still dancing.’

Indefinite articles

Search marketers would certainly leave the dancefloor quick smart if Google’s search algorithm reduced the weight attached to content published at article and online PR sites.

It’s been a long time since Google respected paid links. Yet a link from Ezine Articles or another article site is effectively a paid link – but purchased with content rather than cash. You give Ezine some content, you get a backlink. It’s a transaction. For PR sites, submission fees for the sites that can deliver the most backlinks make the nature of the deal even more explicit.

Online directories with submission fees are doing a similar thing. But the nature of the relationship between client and site is much clearer – plus you can only get one backlink from each directory, rather than plugging away indefinitely.

Since Google respects article and PR links, it’s simply a case of putting in the hours to create adequate content and ‘spinning’ it across as many sites as you dare.

Yes, there are quality standards, but they’re not particularly exacting. The sanity check is ‘value for users’. Give me ten minutes and I’ll find you ten articles – on almost any subject – that add no value because they are corporate puff, embarrassingly basic or near-duplicates of other articles.
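To make the near-duplicate point concrete, here’s a minimal sketch of one common way to spot ‘spun’ copy: break each text into overlapping three-word ‘shingles’ and measure how much the two sets overlap (Jaccard similarity). The function names and sample sentences are my own inventions for illustration, and I’m not suggesting this is how any article site or search engine actually checks submissions.

```python
import re


def shingles(text: str, size: int = 3) -> set:
    """Return the set of overlapping word n-grams ('shingles') in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        # Text too short for a full shingle: treat it as one unit.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: str, b: str, size: int = 3) -> float:
    """Jaccard similarity of two texts' shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0  # two empty texts are trivially identical
    return len(sa & sb) / len(sa | sb)


# Hypothetical sample texts, invented for this example.
original = "Laminate flooring is a durable and affordable choice for busy family homes"
spun = "Laminate flooring is a durable and affordable option for busy family homes"
unrelated = "Retweets are one of the purest online votes of confidence"

print(jaccard(original, spun))
print(jaccard(original, unrelated))
```

Swapping a single word still leaves the spun version sharing most of its shingles with the original, while the unrelated sentence shares none. A crude check like this catches lazy spinning, but it says nothing about whether either version is worth reading.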

The other main way of ‘gaming’ Google is by creating banks of SEO fodder: big chunks of content that is nominally relevant but actually not that valuable to users. Since Google can’t gauge the human value of content (yet), it sees this as worthy content and often ranks it quite highly.

The cynicism of all this is well known to anyone with the slightest acquaintance with search marketing. Yet we’re still recommending it to our clients – because as long as Google works as it does, it gets results.

But that could change. We’re unlikely to see existing article links deprecated, but it seems inevitable that new links will be gradually downgraded until they’re weighted appropriately. SEO fodder represents a tougher challenge for Google.

Dark satanic mills

To sate the voracious content appetites of article, PR and SEO marketers, we’re now seeing the advent and growth of so-called ‘content mills’ or ‘word factories’, which offer a highly cost-effective way to obtain large quantities of (allegedly) optimised text. Clients pay by the word, and obtain ready-made web content that they can use for their SEO campaigns. I’ve covered the drawbacks for clients here so I won’t repeat myself.

This AdWeek article argues that content mills are one of the key growth areas in digital marketing for 2010. Maybe so, but it’s going to be a case of making hay while the sun shines. Competition will force low prices even lower, while a game-changing new Google algorithm that reduces the efficacy of content spam will result either in fewer customers (why bother?) or lower prices again (why overpay for weak links?).

Eating sawdust

As a result of all this, the internet is filling up with unreadable rubbish, damaging the searching and browsing experience for us all, as this post vividly argues. Even the AdWeek article referenced above acknowledges the point:

‘The question for 2010 is whether this automation and data-driven approach will lead to a flowering of useful information or more detritus clogging search results with low-grade, ad-heavy Web pages.’

That is indeed the question for 2010. And my money’s on the detritus, because web publishers do not presently see any value or profit in providing truly useful information – and search marketers are doing little to persuade them otherwise.

Some observers (such as Carson Brackney in this post) argue that there’s a place for lower-quality writing, and that web users aren’t as fussy or demanding as self-regarding copywriters would like them to be. Often, a food analogy is used: sometimes you like steak, but other times a burger will do.

For me, this is disingenuous. SEO pages are created purely for search purposes, with no thought of providing any value to the reader. SEO content differs from ‘proper’ web content not by degree, but by nature: it’s not a cut-price equivalent, but a completely different animal. Again, honest search marketers will admit this.

Reading SEO spam is more like eating sawdust than munching a burger: it will fill you up, but there is literally no enjoyment or nutrition to be gained from it – because it was never intended for human consumption.

Who could argue, with a straight face, that anyone is going to get anything out of an article like this? And more to the point, do the search benefits for the firm involved really outweigh the reputational damage of having this sort of rubbish associated with their brand?

Semantic search

So the webwaves are choked with SEO flotsam and jetsam. Somehow, search has to get more sophisticated, to filter out the rubbish – or users will lose faith. And Google, though a mighty corporation, ultimately depends on users’ faith in the accuracy and usefulness of its results.

One option is a form of semantic search, where Google actually comprehends the meaning of content rather than simply analysing it with metrics such as keyword density. This could be applied to website content or backlinking pages. However, at present, it’s a long way off.
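For anyone unfamiliar with the metric, keyword density is simply the share of a page’s words taken up by a target phrase. The sketch below is my own illustration, with invented sample copy and a hypothetical function name; it isn’t a claim about how Google calculates anything.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Share of the words in text taken up by occurrences of keyword (0.0 to 1.0)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    # Count every position where the full keyword phrase appears.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return hits * n / len(words)


# Hypothetical sample copy, invented for this example.
fodder = ("Cheap flooring. Buy cheap flooring today. "
          "Our cheap flooring is the cheap flooring you need.")
useful = ("Measure the room, leave a gap at the walls for expansion, "
          "and lay the boards along the longest wall.")

print(keyword_density(fodder, "cheap flooring"))
print(keyword_density(useful, "cheap flooring"))
```

The fodder scores far higher than the advice, even though only the second passage tells the reader anything. That gap between counting words and understanding them is what semantic search would have to close.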

There are tools (such as this one for Twitter) that attempt to bring a basic level of semantic search to social media. However, as you’ll quickly discover if you give it a go, there’s more to analysing the emotions of a piece of writing than categorising particular trigger words into ‘positive’ and ‘negative’. We have a long way to go before machines understand that ‘good riddance’ is a negative sentiment and ‘killer post’ a positive one.
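Here’s a minimal sketch of the trigger-word approach, to show why it trips over exactly those phrases. The word lists are tiny and invented for this example; real tools presumably use much larger lists, but the weakness is the same.

```python
import re

# Hypothetical trigger-word lists, invented for this illustration.
POSITIVE = {"good", "great", "love", "excellent"}
NEGATIVE = {"bad", "awful", "hate", "killer"}


def naive_sentiment(text: str) -> str:
    """Label text by counting positive and negative trigger words."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(naive_sentiment("Good riddance"))  # labelled positive, though the meaning is negative
print(naive_sentiment("Killer post"))    # labelled negative, though the meaning is positive
```

Each word is judged in isolation, so idiom and context are invisible to the classifier.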

Social search

Another option for improving search is some kind of link-up with social media – seemingly a ready-made source of user opinion that could be used to shape search results. All Google has to do is find a way of mining the goodwill being expressed at social media sites every day. Instead of viewing backlinks as ‘votes’ on the quality of online content, it can use social media sentiment as a measure of what people think of a site or page.

Retweets are a good example of a ‘goodwill meter’. Although they could theoretically be paid for, RTs are one of the purest online votes of confidence there is. If my article gets tweeted, a human being thinks it’s valuable. Google already uses Digg links as a measure of popularity, so this seems like a natural next step.
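As a thought experiment only, here’s a sketch of how a ranking might blend backlinks with retweets. Everything in it is an assumption on my part: the `Page` fields, the `social_weight` parameter, the example URLs and the numbers are all invented, and none of it describes how Google actually ranks pages.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    backlinks: int  # inbound links, the traditional 'vote'
    retweets: int   # hypothetical social signal


def rank(pages, social_weight=0.5):
    """Order pages by a weighted blend of each signal's share of the total."""
    total_links = sum(p.backlinks for p in pages) or 1  # avoid dividing by zero
    total_rts = sum(p.retweets for p in pages) or 1

    def score(p):
        return ((1 - social_weight) * p.backlinks / total_links
                + social_weight * p.retweets / total_rts)

    return sorted(pages, key=score, reverse=True)


# Hypothetical pages, invented for this example.
pages = [
    Page("example.com/seo-fodder", backlinks=400, retweets=2),
    Page("example.com/useful-guide", backlinks=50, retweets=120),
]

print([p.url for p in rank(pages, social_weight=0.0)])  # backlinks only
print([p.url for p in rank(pages, social_weight=0.5)])  # equal blend
```

On backlinks alone, the heavily linked fodder page comes first; give retweets equal weight and the guide that people actually shared overtakes it. Any signal of this kind could be gamed in turn, of course, so the weighting would need constant tuning.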

Efficient refinery

One way of proactively digging out better results is by refining your search criteria, narrowing your focus to filter out some of the rubbish. At present, it’s up to users themselves to refine their search by adding keywords or trying new ones.

Google knows that it has to guide users towards finer searches one way or another, but the lack of prominence it gives to its ‘related searches’ and ‘wonder wheel’ suggests that it only half-believes in them. It might have to do more in the future to develop tools that allow rapid, intuitive refining of results, including (perhaps) one-click filters to eliminate blog, article and PR postings.

Wait and see

Whatever the future brings, it’s going to be fascinating. Google’s success depends on providing useful, unspammy search results, so we can be sure that some sort of change will come. And whatever it is, it’s surely going to change the face of search marketing completely over the next five years.

Tags: article marketing, Copify, Digital and social, online PR, semantic search, SEO